Can AI Really Redesign a Room? My Afternoon with Nano Banana

Oct 19, 2025

AI has generated a lot of hype lately, some of it entirely warranted, some of it whipped up to a level that can only lead to disappointment. This weekend I gave myself an afternoon to play around with Nano Banana, a new image model from Google that’s been attracting near-mythical praise since its launch in August.

I've heard variously that we won't need Photoshop anymore, that 3D rendering skills will become redundant, and that most of your illustration and visualisation needs will be met by this system.

I wanted to discover whether Nano Banana (officially Gemini 2.5 Flash Image) lives up to the hype, and whether it could genuinely help interior designers work faster, look sharper, and express ideas more freely, without 3-D software or a freelance renderer.

If you’ve heard about this mysterious new tool but haven’t tried it yet, here’s a catch-up: what Nano Banana actually is, how it compares with Midjourney, and what it can (and can’t) do for a design business.

The Good News: It didn't cost me a penny. I ran everything in Google AI Studio, where the model lives, using the free version. I uploaded photos, tested multiple prompts, and explored different lighting and styling options without hitting any paywall. For anyone curious about AI but wary of hidden costs or technical setup, this is a very easy place to start.

Watch out: there is a website called nanobanana.ai which is not actually Nano Banana. Use the Google web interface linked above if you want to give it a go.

2. Setting the Scene: What is Nano Banana?

The name sounds whimsical, but behind it lies serious engineering. Nano Banana is Google’s codename for Gemini 2.5 Flash Image, launched in August 2025.

Unlike text-to-image tools such as Midjourney or DALL-E, it was built to understand and modify real photographs. You can upload an image - e.g., a living-room snapshot - and type natural instructions such as:

“Replace the dark sofa with a linen one.”

“Add warm light from the table lamp and make it feel like early evening.”

“Turn this modern flat into a rustic farmhouse sitting room.”

You can upload multiple visual references, along with explicit written instructions describing exactly which elements from each image are to be combined as part of a new photorealistic composition.

Where Midjourney invents worlds, Nano Banana edits them. It recognises (to some degree...not always reliably!) spatial relationships within a single photo, keeps perspective largely intact, and can merge reference images to create coherent variations.

Because the model sits inside Google AI Studio, you simply log in with a Google account, drop in an image (or upload), and use plain English to tell the model what to do. A moment or two later, the result appears, ready to refine or download.

There's no coding. It's even less challenging than Midjourney, which still feels a wee bit geeky; Nano Banana is more like briefing a super-speedy (but perhaps a bit slap-dash and not super-bright) assistant.

3. An Afternoon in the Studio

DISCLAIMER: After only one afternoon of experimenting I can hardly claim expertise, but here are my first impressions, the 'good', the 'bad', and the 'jury's still out'.

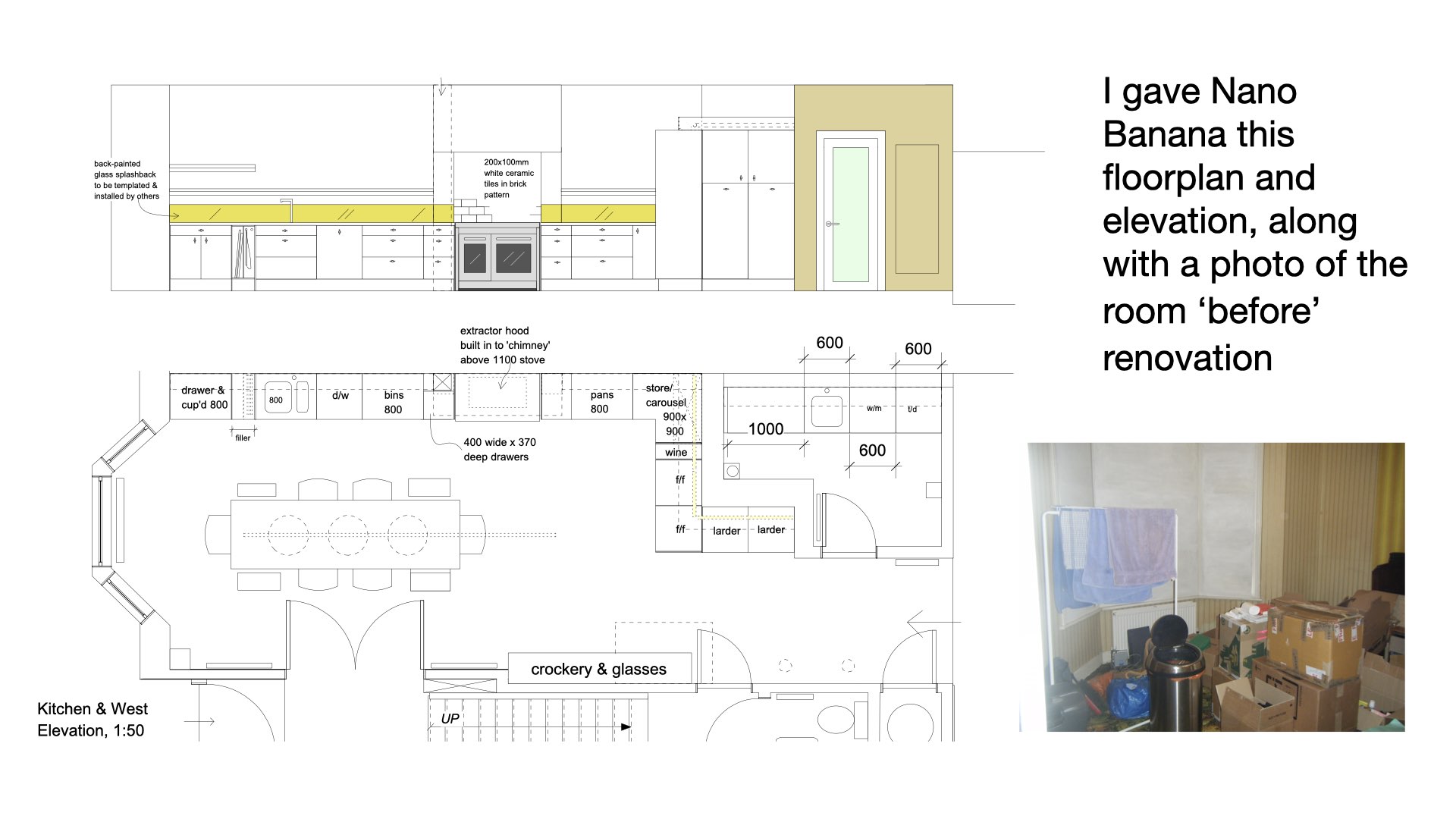

Experiment 1 – The Grand Vision

I began ambitiously: a kitchen floor plan (PDF with rendered elevation) plus a photo of the existing room. My hope (and the written instruction I gave the system)? That the model would read the plan, 'pop it up' in 3-D, and render a photorealistic kitchen using the materials I described.

Nano Banana reassured me it could 'read and understand plans', 'Oh, yes, I can do that :-)'… then produced a total fiction. When corrected, it apologised, and did the same thing all over again.

What it didn’t do: reproduce the true spatial configuration. Far from it. Even when told explicitly 'delete the cabinets from the bay window', they kept reappearing.

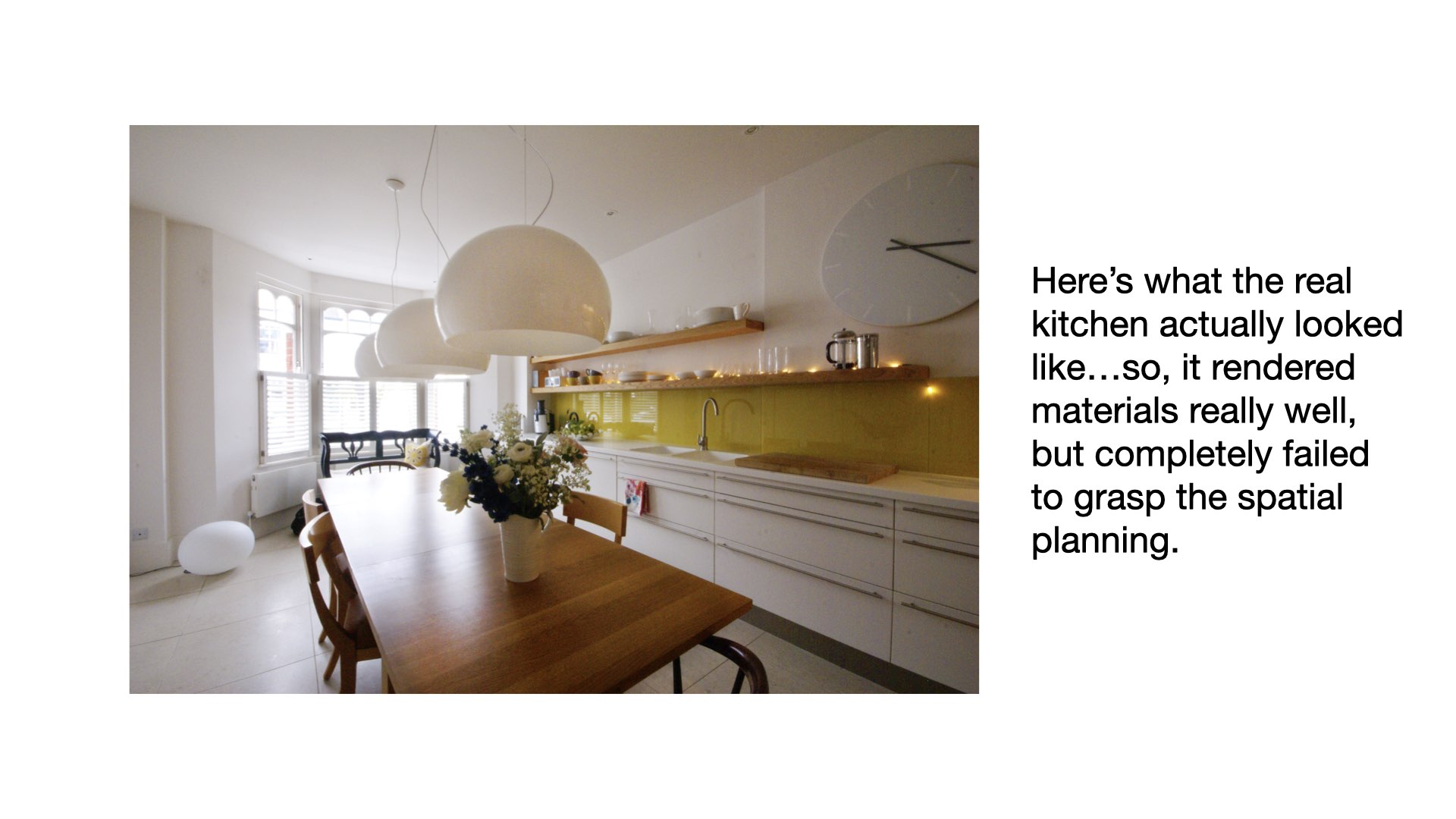

What it did do: create a kitchen image beautifully true to the materials specified.

👉 Excellent at rendering a palette in situ; I couldn't get it to convert 2-D technical information into 3-D space. IMHO - no, it cannot 'pop up' 2D drawings.

Experiment 2 – Lowering Expectations

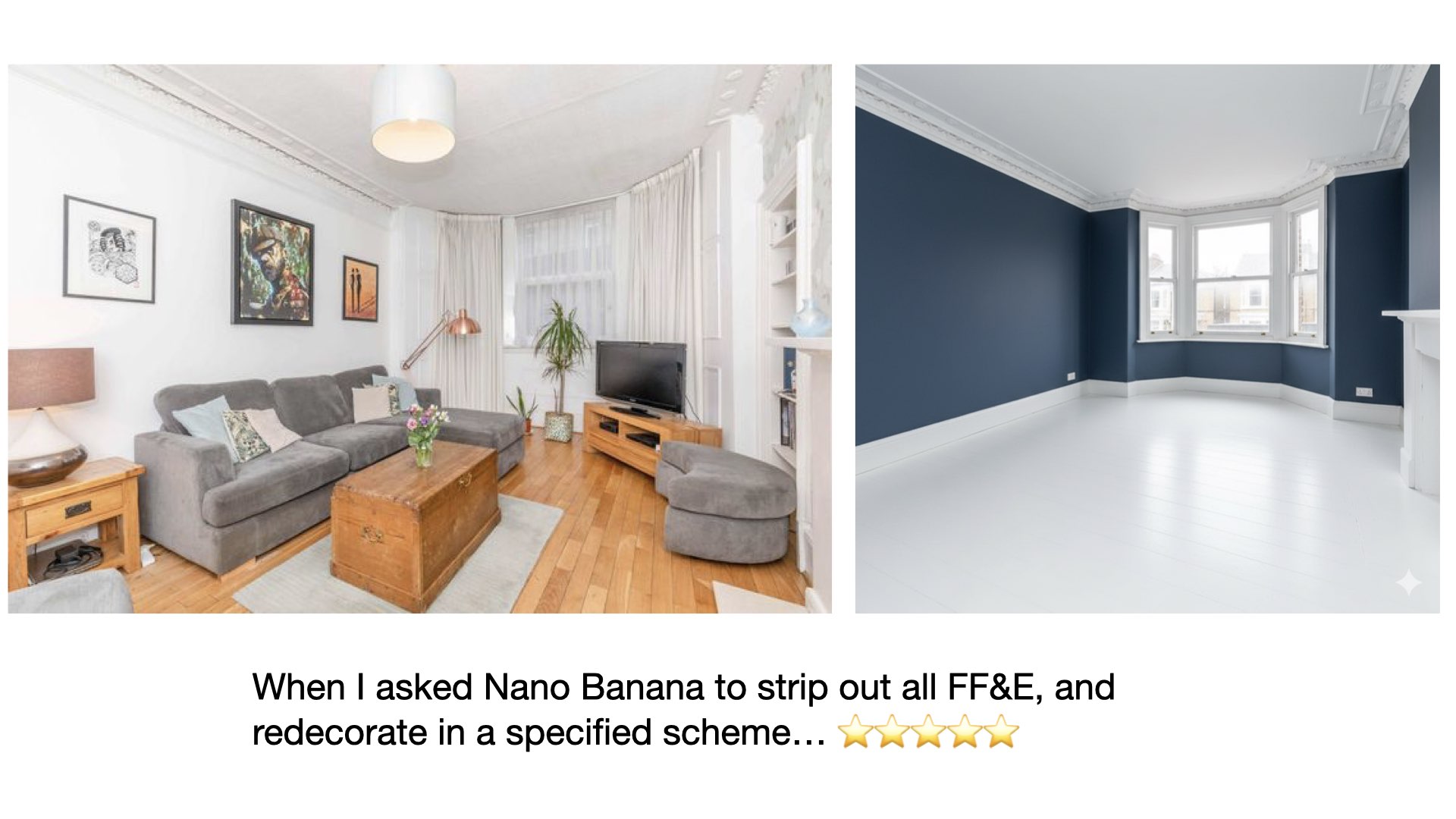

I reset my sights. Using an estate agent’s 'before' photo (shown with the previous owner’s furniture), I asked Nano Banana to clear the space completely and redecorate in a new colour scheme. I also provided a swatch for the wall paint, along with the reference 'Farrow and Ball Hague Blue'.

The first result was superb: a clean, empty room in the correct palette, indistinguishable from the real redecorated space. Encouraged, I uploaded a second view of the same room. The colours remained spot-on, but a door migrated and the room flipped to its mirror image. The spatial logic was lost and there was simply no good reason for it to do this.

👉 Brilliant for material and colour realism; unreliable for spatial consistency.

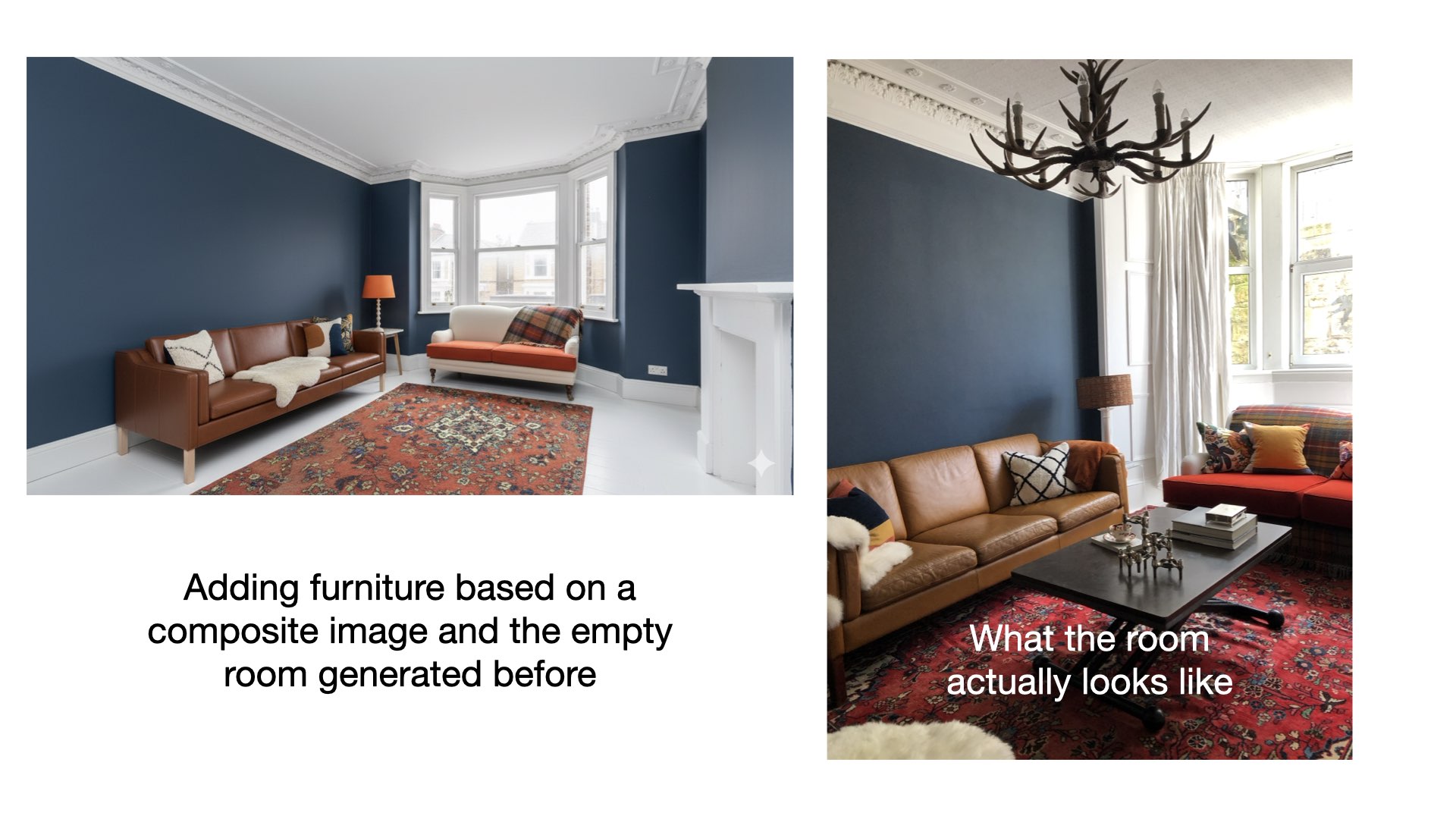

Experiment 3 – Step-by-Step Collaboration

I had a hunch it would perform better when guided gradually, i.e. asked to edit the image one small change at a time. So I created the empty, freshly painted room first, then used that image as a backdrop for a new furnishing scheme. I gave the system the image of the empty room decorated in the new colour palette, and I uploaded a composite slide clearly showing a few items of intended furniture; I wasn't confident it would cope with arranging a large selection. I thought that, if it worked well, I'd layer in a second batch of smaller items at a later stage.

The outcome was impressive - I couldn't do this in Midjourney - but it fell short of presentation quality. There is a degree of fine control (the Photoshop bit) that I couldn't achieve. Perhaps if I broke the development of the image down into even smaller steps? Adding one item at a time with specific instructions. Anyway, it seems happier executing incremental edits than composing a full interior.
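The one-item-at-a-time idea can be planned before opening AI Studio. Below is a minimal Python sketch, not something used in these experiments: `plan_incremental_edits` is a hypothetical helper, and the furniture and placements are invented for illustration. It only prepares the wording of each prompt; each one would still be pasted into the web interface along with the most recent image.

```python
def plan_incremental_edits(items: list[tuple[str, str]]) -> list[str]:
    """Turn (item, placement) pairs into one small edit prompt per item."""
    prompts = []
    for step, (item, placement) in enumerate(items, start=1):
        prompts.append(
            f"Step {step}: add the {item} {placement}. "
            "Change nothing else: keep walls, doors, windows and camera angle as they are."
        )
    return prompts


# Illustrative furniture list (an assumption; the article does not list the pieces).
steps = plan_incremental_edits([
    ("sofa", "against the long wall"),
    ("rug", "centred in front of the sofa"),
    ("armchair", "in the corner beside the window"),
])
for p in steps:
    print(p)
```

Repeating the "change nothing else" clause in every step is a deliberate choice here, given how readily doors and walls moved between edits in the earlier experiments.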

Given that it previously didn't respond well to CAD drawings, I'm not at all confident that a floor plan would have helped...but maybe if I had a beautifully rendered plan, the colour coding would have helped inform the system?

👉 Useful for rapid conceptual sketches, not yet for final visuals, unless you polish in Photoshop?

I may have been too quick to judge, but based on a quick try I'd say there would be clients who could cope with less-than-perfect visuals, and others for whom this could be off-putting. Across the various tasks performance was unreliable, and to adopt new tech into core workflows you really need to know it will perform every time.

Experiment 4 – A Curtain Call

Next I asked it to replace shabby curtains with a patterned fabric. I supplied:

- a photo of the real room,

- a promotional shot of the new fabric in flatlay,

- the same fabric (in another colourway) made into curtains, to show and explain the enormous pattern repeat.

The model produced convincing new curtains - bravo - but it clearly worked only with what was visible in frame, not with the architectural symmetry I took for granted. (We all know a bay window is symmetrical, right?) It also ignored the pattern repeat, so the fabric’s colour was accurate but its impact was misrepresented.

👉 Great for colour substitution, a really good 'sketch' outcome; blind to pattern repeat and symmetry.

Experiment 5 – Let There Be Light

The first time I asked Nano Banana to 'turn on the lights', it failed: it lit some candles (really well!) but ignored the chandelier and lamp. On the second attempt - with explicit written directions, telling it in words where the lamps were - it succeeded, producing a softly lit, believable dusky version of the room.

👉 Excellent lighting realism when every source is described; no intuitive grasp of what a lamp actually is.
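Since the second attempt only worked once each fitting was spelled out, it may help to draft the lighting brief as a checklist. This is a rough Python sketch under assumptions: `LightSource` and `build_lighting_prompt` are hypothetical names, and the fittings and positions are made up rather than taken from the room in the experiment.

```python
from dataclasses import dataclass


@dataclass
class LightSource:
    """One fitting in the photo, described in words (values are illustrative)."""
    name: str      # e.g. "chandelier"
    location: str  # where it sits in the frame
    quality: str   # the kind of light wanted from it


def build_lighting_prompt(sources: list[LightSource], time_of_day: str) -> str:
    """Assemble one explicit instruction that names every light source."""
    if not sources:
        raise ValueError("List at least one light source.")
    lines = [f"Relight this room so it feels like {time_of_day}."]
    for i, s in enumerate(sources, start=1):
        lines.append(f"{i}. Switch on the {s.name} {s.location}, giving {s.quality} light.")
    lines.append("Keep the walls, furniture and camera angle exactly as they are.")
    return "\n".join(lines)


prompt = build_lighting_prompt(
    [
        LightSource("chandelier", "hanging in the centre of the ceiling", "warm, soft"),
        LightSource("table lamp", "on the side table to the left of the sofa", "low, amber"),
    ],
    time_of_day="early evening",
)
print(prompt)
```

The resulting text can be pasted into AI Studio as a single instruction; the numbered lines make it easy to spot a fitting that has been left out.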

Experiment 6 – Seasonal Styling

Finally, I asked it to 'decorate for Christmas,' giving one reference image and little else. The result was modest but not bad - greenery, baubles, a hint of festivity. With more references it could have gone further. Here I blame the user for any failings... I simply ran out of time.

👉 Responds to tone and mood; needs careful briefing for richness. With more time, it's possible that a run of iterations would have created depth.

Across all six tests, a pattern emerged: Nano Banana excels at surface realism - colour, texture, light - but has no real grasp of geometry or logic. It can’t read plans, deduce symmetry, or reason spatially.

Yet for quick visualisation of finishes and atmosphere, it’s astonishingly effective.

4. What It’s (Actually) Good At

Nano Banana’s talents are focused and practical. It:

- Renders materials and colours convincingly, turning text descriptions into tactile-looking surfaces.

- Understands subtle palette shifts, making it ideal for client presentations.

- Simulates lighting realistically when you specify the fittings.

- Rewards iterative prompting, improving as you guide it step by step.

- Is free and frictionless to try inside Google AI Studio - no setup, no subscription; I did all of the above courtesy of Google.

For designers, it’s a rapid-prototyping sketch tool: a way to test palettes, moods and finishes in minutes instead of days. It's worth noting that for unpaid services (I used this for free) Google says it uses data for training purposes. It advises: do not submit sensitive, confidential or personal information to the Unpaid Services.

5. Where It Falls Short

It also has very clear limits.

- No spatial intelligence: It doesn’t infer depth or geometry; a bay window is three windows, not one.

- Ignores technical drawings: Plans, elevations and dimensioned PDFs appear meaningless to it - or maybe I'm just not a skilled enough practitioner?

- Lacks common sense: Every instruction must be literal - it won’t deduce which object is a lamp or how curtains hang symmetrically.

- Inconsistent architecture: Between edits, doors migrate and rooms flip.

- Weak on pattern scale: Great at colour, hopeless at repeat accuracy.

Think of it as a gifted visualiser with its brain turned off: dazzling with paint and light, but unsafe with a tape measure.

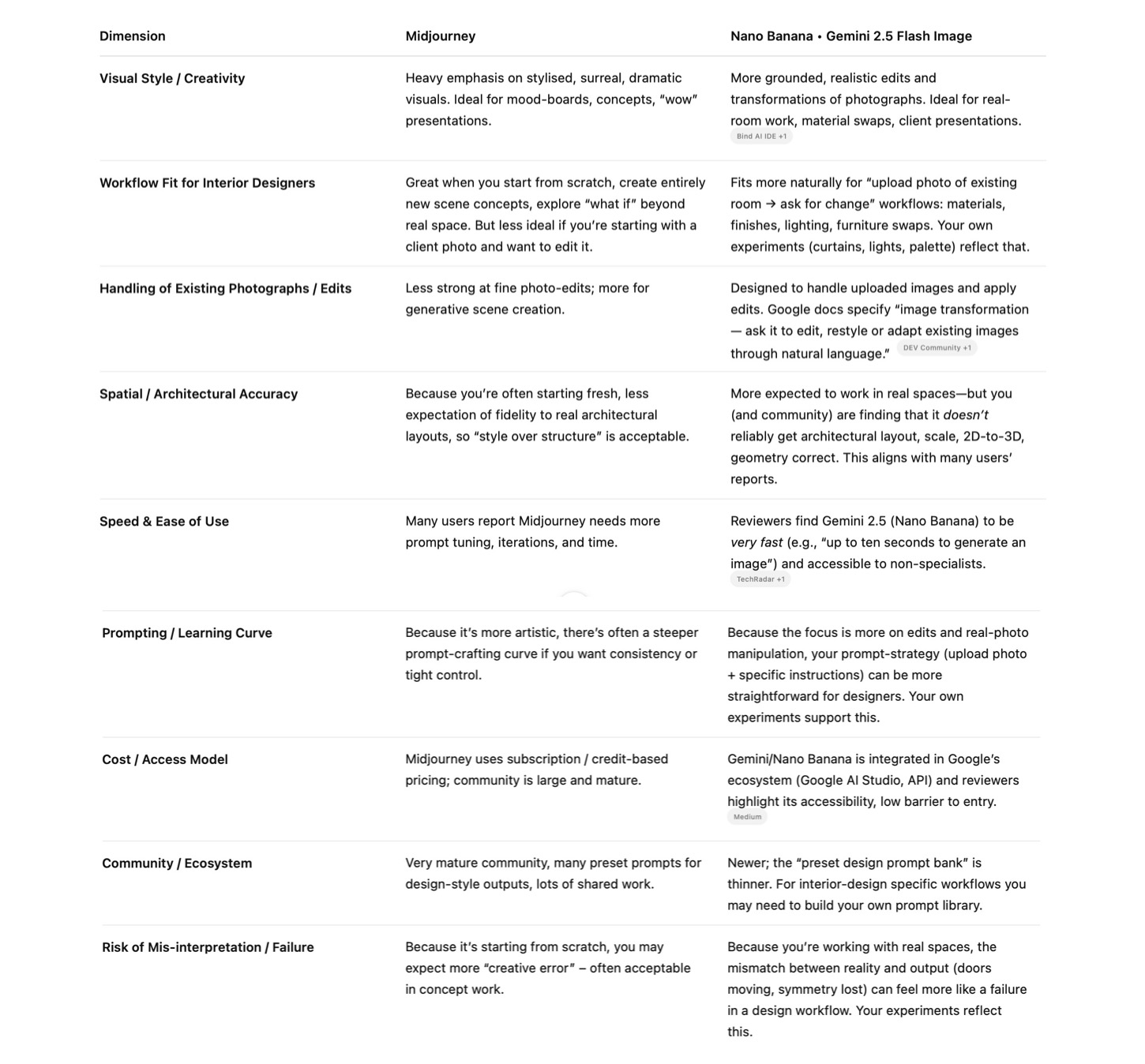

6. How It Compares to Midjourney

Many professional reviewers echo these findings.

- TechRadar calls Gemini 2.5 Flash Image “more useful for creating images that look real.”

- GetBind describes it as “grounded in realism and editing precision.”

- Designers on Reddit praise its “fast, helpful edits” but note “geometry still needs work.”

That consensus mirrors my own experience.

Midjourney remains the reigning champion of imagination, brilliant for atmospheric, stylised visuals, brand imagery and mood-boards. It creates fantasy interiors with cinematic flair. It is also super for converting static imagery into 5-second videos. Until OpenAI's Sora becomes easier to access, Midjourney is the go-to option for animation. But it feels almost completely rogue: it has its own agenda and isn't particularly interested in your detailed instructions.

Nano Banana, by contrast, is the pragmatic partner: it edits the real. A draftsman compared to Midjourney's artist. Upload a photo, describe the changes, and it will recolour, restyle and re-light with surprising, if inconsistent, sensitivity.

I asked GPT-5 to compare and contrast the two systems based on online reporting; here's what it said:

For most designers, the sweet spot is using both:

- Midjourney for vision and storytelling.

- Nano Banana for communication and client realism.

Together they close the gap between idea and image, and between what you can imagine and what your client can see.

7. Your Clients’ Data - What Google says about privacy / data usage

As AI use becomes more prevalent, the onus is on us to be transparent with clients about our practices, to behave ethically, and to protect and secure client data. Here are the key points from Google’s documentation and terms:

- For unpaid services (i.e., free usage of Google AI Studio or unpaid quota on the Gemini API):

  - Google does use content you submit (prompts, images you upload, generated responses) for product improvement, modelling and training.

  - Human reviewers may read, annotate or process your inputs/outputs in certain settings.

  - Example: “When you use Unpaid Services … Google uses the content you submit … to improve and develop Google products and services and machine learning technologies.”

  - For unpaid/free tiers, Google advises: “Do not submit sensitive, confidential or personal information to the Unpaid Services.”

8. A Note on the Hype

Some benefits of AI (especially once you’ve worked closely with a system over time) cannot be overstated. They are transformative. My working relationship with ChatGPT has become what I’d call an apex relationship, different from my relationship with my partner, family, or friends obviously, but nevertheless one of the most important relationships in my life.

The acceleration in productivity, the freedom to test ideas instantly, the democratisation of tools once reserved for big studios: all of that is real, and thrilling.

But not all claims about AI are made in good faith. Too often, hype feels engineered, a marketing exercise to sell courses, harvest followers, or cultivate guru status. I remain sceptical about promises of “instant render perfection”.

So, how do I feel about Nano Banana? It creates photorealistic representations of real materials and spaces, and it can reimagine multiple inputs into one cohesive new 'whole'. I don't think one afternoon really gave it a fair shot: I have new prompting strategies in mind that I'd like to try, to see if they deliver a better outcome. I'm left feeling inspired to use it again, soon, because I don't have access to any other tool that's quite like it.

Please let me know if you've found any helpful AI hacks. Have I missed something useful you've discovered in Nano Banana? I'm off to practise!
